Lee Kuan Yew once said, “The acid test of any legal system is not the greatness or the grandeur of its ideal concepts, but whether, in fact, it is able to produce order and justice.” He was reminding us that a legal system’s value lies not in the beauty of its laws or principles on paper, but in how effectively and fairly it delivers real-world outcomes. Laws can be idealistic, but they only matter if they create practical order and justice for ordinary people.

How Does AI Interact With This Idea in Legal Systems?

AI doesn’t (currently) care about legal concepts or philosophy. It’s built to optimize for outcomes: speed, efficiency, patterns, and probabilities. This means that legal and judicial systems shaped by AI could shift their focus from ‘ideal concepts’ (due process, precedent, human deliberation) to measurable outputs like clearance rates, conviction rates, case backlogs, or predictive crime reduction.

How AI Could Change Global Legal and Judicial Systems—For Better or Worse

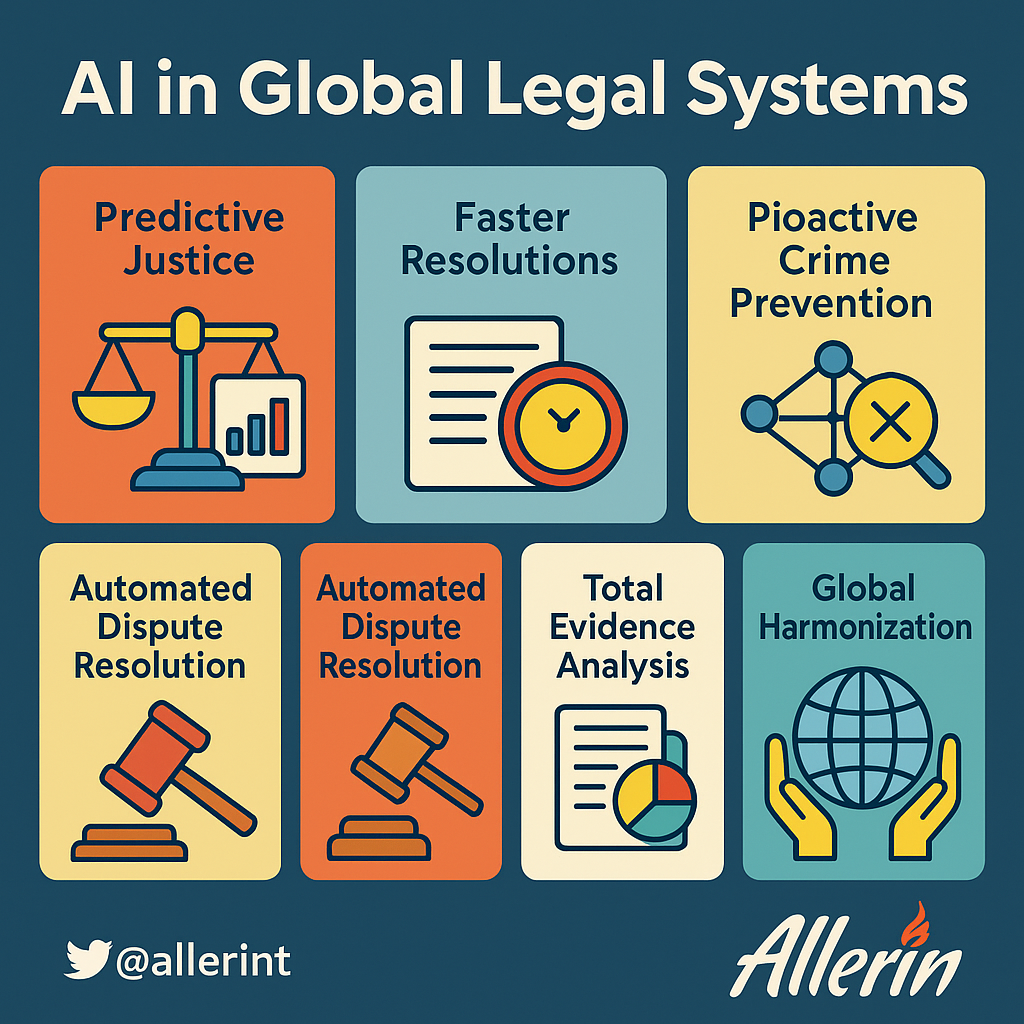

| AI-Driven Shift | How It Impacts Order and Justice |
| --- | --- |
| Predictive Justice | Courts using AI to predict case outcomes based on past data may improve consistency (order), but could sacrifice nuanced human judgment (justice). |
| Faster Resolutions | AI-powered case triage can clear backlogs so cases don’t drag on for years, delivering procedural order but at the risk of oversimplified justice. |
| Bias Detection and Correction | AI can uncover hidden biases in sentencing or policing patterns, improving systemic fairness and delivering both order (systematic fairness) and justice (individual fairness). |
| Proactive Crime Prevention | Predictive policing aims to prevent disorder by forecasting crime, but at the risk of targeting certain communities unfairly, compromising justice. |
| Automated Dispute Resolution | AI could handle small civil disputes entirely online, removing the human element. That is efficient (order), but can AI truly understand justice in cases involving empathy and context? |
| Total Evidence Analysis | AI can review vast datasets no human could process, ensuring no evidence is missed (order). But can fairness exist if the algorithms are biased or opaque? |
| Global Harmonization | AI can compare legal systems across countries and propose global best practices, helping align order across borders. But whose concept of justice prevails? |

The Core Tension: AI Optimizes for Order—But Can It Understand Justice?

AI is great at producing order:

- Faster case processing.

- Efficient evidence review.

- Predictive analytics to manage court dockets.

- Risk scoring to assess flight risks or recidivism.

But justice is messier—it involves:

- Individualized consideration.

- Understanding context, culture, and emotion.

- Applying ethical frameworks.

- Ensuring that marginalized voices are heard.

Here are examples of this tension in action:

| System | AI Benefit (Order) | AI Risk (Justice) |
| --- | --- | --- |
| Predictive Sentencing | Consistent sentencing recommendations. | Can reinforce systemic biases against minorities. |
| Legal Chatbots for Self-Help | Makes legal help accessible 24/7. | Chatbots can misinterpret complex situations, disadvantaging vulnerable users. |
| AI-Driven Risk Assessment in Bail Decisions | Faster, data-driven decisions. | Defendants treated as data points, not humans with stories. |
| Automated Evidence Review | Finds hidden connections in vast data. | Can miss nuance, humor, sarcasm, or cultural meaning. |

If AI only optimizes for system performance—clearing cases quickly, maximizing convictions, predicting crimes before they happen—it might create the illusion of a perfectly ordered society, while undermining the deeper human need for fairness, equity, and dignity.

So to create a good system with AI, what can we do? We can start by defining the system.

What Constitutes the Legal System?

The legal system can be broadly broken down as follows:

| System | What It Covers |
| --- | --- |
| Legal System | The whole ecosystem: laws, law enforcement (police), courts, and corrections (prisons). |
| Judicial System | Just the courts: judges, lawyers, and the process of resolving disputes and delivering justice. |
| Law Enforcement | Focuses on enforcing laws, investigating crimes, and protecting public safety; they do NOT handle trials or sentencing. |
| Corrections | Prisons, probation, and parole; they handle punishment and rehabilitation after a court decision. |

AI in the Legal System (The Whole Ecosystem)

The legal system is the umbrella, covering laws, law enforcement, courts, and corrections. AI helps tie all these pieces together, creating smarter, faster, and (ideally) fairer systems through:

- Data sharing and analysis: AI helps connect data from police reports, court filings, and prison records to create a more complete view of a case.

- Legal document automation: Across the system, AI helps generate legal documents, from arrest reports to sentencing orders, with fewer errors.

- Public access and transparency: AI chatbots and portals help citizens navigate legal processes (like filing complaints, tracking cases, or understanding their rights).

- Bias detection: AI tools can analyze patterns across the system to identify racial, gender, or socioeconomic biases—helping policymakers target reforms.

To ensure AI serves justice and not just order in the broader legal ecosystem, human oversight at every stage is crucial. Systems should include transparent auditing mechanisms where AI decisions are logged, explainable, and subject to review by human experts. Cross-agency ethics committees should evaluate how AI is trained and deployed, ensuring racial, socioeconomic, and gender biases are actively identified and corrected across police, courts, and corrections. Additionally, legal professionals need AI literacy training, so they understand the capabilities—and limits—of the technology they’re working alongside.
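
As a rough illustration of what "logged, explainable, and subject to review" could look like in practice, the Python sketch below keeps an append-only log of AI recommendations. Everything in it is an assumption made for illustration: the `AIDecisionRecord` fields, the `AuditLog` class, and the hash-chaining approach are hypothetical and are not drawn from any real court or agency system.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional


@dataclass
class AIDecisionRecord:
    """One logged AI recommendation (all field names are hypothetical)."""
    case_id: str
    tool_name: str
    recommendation: str
    explanation: str                    # plain-language reason shown to reviewers
    reviewed_by: Optional[str] = None   # stays None until a human signs off
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class AuditLog:
    """Append-only log; each entry is chained to the previous one by hash."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, str]] = []

    def append(self, record: AIDecisionRecord) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        payload = json.dumps(asdict(record), sort_keys=True)
        entry_hash = hashlib.sha256((prev_hash + payload).encode()).hexdigest()
        self.entries.append(
            {"payload": payload, "prev": prev_hash, "hash": entry_hash}
        )
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; an entry edited after the fact breaks it."""
        prev_hash = ""
        for entry in self.entries:
            expected = hashlib.sha256(
                (prev_hash + entry["payload"]).encode()
            ).hexdigest()
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True

    def pending_review(self) -> List[dict]:
        """Records that no human expert has reviewed yet."""
        records = [json.loads(entry["payload"]) for entry in self.entries]
        return [r for r in records if r["reviewed_by"] is None]
```

In this sketch, `pending_review()` gives oversight staff the recommendations that still lack a human sign-off, and `verify()` returns `False` if any stored entry was altered after it was written.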

AI in the Judicial System (Courts)

The judicial system (courts, judges, lawyers, trials) is where AI adoption is booming. Here’s how AI helps:

| Area | AI Use Case |
| --- | --- |
| Legal Research | AI-powered research tools find case law, draft arguments, and spot weak points in legal briefs. |
| Case Management | Courts use AI to track cases, auto-schedule hearings, and predict case timelines based on similar past cases. |
| Predictive Analytics | AI can suggest likely outcomes based on judge history, case facts, and precedents, helping lawyers and clients make smarter decisions. |
| Virtual Hearings | AI tools moderate online hearings, manage speaking times, and even transcribe in real time. |
| Evidence Analysis | AI reviews vast evidence sets (documents, emails, videos) to flag relevant information for discovery and trials. |
| Judicial Analytics | Attorneys use AI to profile judges, understanding how they’ve ruled in similar cases to fine-tune arguments. |
| Access to Justice | AI-powered legal aid chatbots guide people through filing processes, especially for self-represented litigants. |

Example: Some family courts use AI to suggest fair child support amounts based on case law and cost-of-living data.

In courts, AI should assist, not replace, judicial discretion. To mitigate risks, explainable AI (XAI) models must be used, where judges, attorneys, and even litigants can understand how AI recommendations were formed. Courts should also adopt transparency protocols, requiring any AI-generated predictions, case timelines, or sentencing suggestions to be disclosed on record—giving parties the opportunity to contest or contextualize them. Finally, ongoing algorithmic audits should test these systems for emerging biases or drift, ensuring justice isn’t compromised for speed.

AI in Law Enforcement (Police)

This is where AI meets the street, helping police investigate crimes, predict incidents, and handle evidence. This area is powerful but controversial, especially regarding bias, privacy, and surveillance concerns. Here’s how AI helps police:

| Area | AI Use Case |
| --- | --- |
| Predictive Policing | AI analyzes crime data to predict where crimes might happen next, helping allocate patrols (though this raises racial profiling concerns). |
| Facial Recognition | AI scans video feeds to match faces to criminal databases, speeding up suspect identification (but accuracy is debated). |
| Body Cam & Surveillance Analysis | AI can scan hours of body cam footage for specific events, keywords, or behaviors, helping investigations. |
| Crime Mapping & Analysis | AI tools create crime heatmaps, helping police identify patterns and link crimes across jurisdictions. |
| Evidence Review | AI scans emails, texts, and social media for relevant clues in investigations. |
| Real-time Threat Detection | AI-enhanced sensors in public spaces can detect gunshots or suspicious behaviors and alert authorities instantly. |

Example: New York City police use ShotSpotter, an AI-powered system that detects gunshots and pinpoints their location in real time.

To ensure justice is not sacrificed in law enforcement, all AI tools used for predictive policing, facial recognition, and surveillance analysis should undergo independent bias audits before deployment—and at regular intervals thereafter. Policymakers should mandate clear usage guidelines that define how much weight AI-generated leads carry in investigations and arrests. Communities should also have oversight panels that review how predictive policing and AI surveillance technologies are impacting different demographics, ensuring transparency, accountability, and public trust.
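
One simple check a bias audit might include is comparing how often a tool flags people in different demographic groups. The Python sketch below is a minimal illustration under stated assumptions: the `(group, was_flagged)` record shape and the function names are hypothetical, and the default 0.8 threshold is borrowed from the "four-fifths" rule of thumb used in US employment-selection guidance. It is not an established standard for policing tools, and a real audit would look at far more than one ratio.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def flag_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of people in each group that the tool flagged.

    Each record is (group_label, was_flagged); both fields are hypothetical.
    """
    totals: Counter = Counter()
    flagged: Counter = Counter()
    for group, was_flagged in records:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def rate_ratio(rates: Dict[str, float]) -> float:
    """Lowest group rate divided by the highest; 1.0 means identical rates."""
    if not rates:
        raise ValueError("no groups to compare")
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was flagged, so there is no disparity to measure
    return min(rates.values()) / highest


def needs_review(rates: Dict[str, float], threshold: float = 0.8) -> bool:
    """True when the gap between groups is wide enough to escalate to humans."""
    return rate_ratio(rates) < threshold
```

A result of `True` from `needs_review` would not prove the tool is biased; in this sketch it only marks the tool for closer examination by the kind of independent auditors and community oversight panels described above.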

AI in Corrections (Prisons, Probation, Parole)

In corrections, AI helps manage prison populations, assess inmate risks, and even assist with rehabilitation programs. Here’s how AI helps:

| Area | AI Use Case |
| --- | --- |
| Risk Assessment | AI predicts which inmates are likely to reoffend, influencing parole decisions (though these tools are criticized for racial bias). |
| Inmate Classification | AI helps classify inmates into low, medium, or high-risk categories, shaping security levels and rehabilitation programs. |
| Rehabilitation Matching | AI recommends education, therapy, or job training programs based on an inmate’s profile and past outcomes. |
| Monitoring Communications | AI scans inmate calls and messages for keywords indicating contraband, gang activity, or planned violence. |
| Staffing Optimization | AI helps prisons schedule staffing based on population levels, incident patterns, and risk assessments. |
| Post-Release Supervision | AI analyzes data (employment status, check-ins, location data) to flag parolees at risk of violating terms. |

Example: Some prisons use AI to identify inmates most likely to benefit from substance abuse programs, based on behavioral data and past program success rates.

AI tools in corrections—especially those influencing risk assessments, parole eligibility, and rehabilitation program matching—must be evaluated for fairness and explainability. AI risk scores should be advisory, not determinative, and final decisions should remain with human parole boards or correctional staff who can account for personal history and context. Inmates and parolees should have the right to contest AI-driven assessments, with legal aid available to help them challenge errors in their profiles. Regular audits and publicly accessible performance reports should track how these systems impact recidivism and rehabilitation outcomes, ensuring they serve justice, not just efficiency.
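
The "advisory, not determinative" principle can be built into the data model itself. The Python sketch below is one hypothetical way to do that: a decision record cannot be created without a named human decision-maker and a written rationale, and a score that is under challenge is withheld from the record. The class names, fields, and the `decide` function are all illustrative assumptions, not a description of any existing parole system.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class RiskAssessment:
    """Output of a hypothetical risk tool."""
    person_id: str
    score: float                    # model output, assumed to lie in [0, 1]
    top_factors: Tuple[str, ...]    # explanation shown to the board
    contested: bool = False         # set when the person challenges the score


@dataclass(frozen=True)
class ParoleDecision:
    """The human decision; the score is attached only as context."""
    person_id: str
    granted: bool
    decided_by: str
    rationale: str
    advisory_score: Optional[float]


def decide(
    assessment: RiskAssessment,
    granted: bool,
    decided_by: str,
    rationale: str,
) -> ParoleDecision:
    """Record a decision made by a person, never by the score alone."""
    if not decided_by.strip():
        raise ValueError("a named human decision-maker is required")
    if not rationale.strip():
        raise ValueError("the decision must carry a written rationale")
    # A contested score is left off the record until the challenge is resolved.
    score = None if assessment.contested else assessment.score
    return ParoleDecision(
        person_id=assessment.person_id,
        granted=granted,
        decided_by=decided_by,
        rationale=rationale,
        advisory_score=score,
    )
```

Note that `granted` is an input supplied by the board member, not something computed from `score`. That design choice is the point of the sketch: the tool informs the decision but has no code path that makes it.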

Common Use Case: AI for Evidence Review

One use case common across multiple actors in the legal system is evidence review. Several countries, including the USA, have already started using AI-powered evidence review systems—and they’re making a big impact across criminal investigations, court proceedings, and case management.

In the US, AI is used for:

- Digital evidence review: Processing terabytes of data from phones, computers, and cloud storage in criminal investigations.

- Video and image analysis: Analyzing surveillance footage, body cam recordings, and crime scene photos.

- Audio transcription: AI tools convert suspect and witness interviews into searchable transcripts.

In the UK, AI is being used to:

- Enhance and analyze CCTV footage in real time.

- Help police review massive volumes of digital evidence in child exploitation and financial crime cases.

Australian police forces use AI to review forensic evidence, including:

- DNA analysis.

- Crime scene photo reconstruction.

- Digital evidence triage in cybercrime investigations.

There are huge benefits to using AI for evidence review:

| Benefit | What It Means |
| --- | --- |
| Speed | AI can review thousands of documents, videos, or audio files in hours instead of weeks or months. |
| Accuracy | AI tools are trained to detect relevant evidence, such as firearms in videos, or flag unusual keywords in messages. |
| Pattern Recognition | AI can connect dots humans might miss, for example by linking phone numbers, financial transactions, or location data across cases. |
| Enhanced Searchability | AI tools create indexed, searchable case files, where investigators can type keywords and instantly find all related evidence. |
| Cost Savings | Less manual review time means lower costs for both police departments and prosecutors’ offices. |
| Fairness & Transparency (if properly applied) | AI can help track the chain of custody, ensuring evidence handling complies with legal standards. |
| Handling Large Data Sets | Especially in cybercrime, financial crime, and terrorism cases, the volume of digital evidence is too large for humans alone to process. |

To ensure AI-powered evidence review supports both order and justice, it’s critical that AI tools remain assistive rather than authoritative. Every piece of evidence flagged, prioritized, or excluded by AI should be subject to human verification—especially in cases involving high stakes like capital offenses or major civil disputes. Courts should also mandate disclosure requirements, ensuring all parties (including defense counsel) know when AI has been used to process or identify evidence. Additionally, AI systems should be trained on diverse, unbiased datasets to reduce the risk of certain types of evidence being systematically overlooked or over-prioritized. Finally, courts and investigative bodies must create clear appeal mechanisms, allowing defendants or litigants to challenge AI-derived evidence processing if they believe it introduced bias or error into the case.
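
To make the idea of a searchable case file with mandatory human verification more concrete, here is a minimal Python sketch. It uses a plain keyword index rather than any AI model, and every name in it (`EvidenceItem`, `EvidenceIndex`, `verified_by`) is a hypothetical chosen for illustration. Real evidence platforms are far more sophisticated; the sketch only shows how "flagged by the tool" and "confirmed by a person" can be kept as separate states.

```python
import re
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass
class EvidenceItem:
    item_id: str
    text: str
    verified_by: Optional[str] = None   # None until a human confirms relevance


class EvidenceIndex:
    """Keyword index over evidence text (a stand-in for a real search tool)."""

    def __init__(self) -> None:
        self._items: Dict[str, EvidenceItem] = {}
        self._postings: Dict[str, Set[str]] = defaultdict(set)

    def add(self, item: EvidenceItem) -> None:
        self._items[item.item_id] = item
        # Lowercase and split on anything that is not a letter or digit.
        for token in re.findall(r"[a-z0-9]+", item.text.lower()):
            self._postings[token].add(item.item_id)

    def search(self, keyword: str) -> List[EvidenceItem]:
        """All items containing the keyword, in item_id order."""
        ids = self._postings.get(keyword.lower(), set())
        return [self._items[i] for i in sorted(ids)]

    def verify(self, item_id: str, reviewer: str) -> None:
        """Record that a named person has checked this item."""
        self._items[item_id].verified_by = reviewer

    def unverified_hits(self, keyword: str) -> List[EvidenceItem]:
        """Search results that still await human verification."""
        return [i for i in self.search(keyword) if i.verified_by is None]
```

In this sketch, nothing returned by `search` counts as confirmed until `verify` has been called for it, so `unverified_hits` doubles as the review queue for investigators.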

Future Vision—A Legal System Shaped by AI

In a fully AI-enabled future, legal and judicial systems might:

- Pre-screen cases for settlement or trial eligibility using machine learning.

- Auto-generate sentencing ranges based on crime patterns, location, socioeconomic data, and public sentiment.

- Assign judges or mediators based on algorithmic matching of personality types and case categories.

- Constantly audit and refine legal rules based on real-time case data, ensuring laws adapt dynamically to social changes.

This could create:

- Hyper-efficient courts.

- Continuous system feedback and improvement.

- Legally optimized societies where laws are tested, evaluated, and revised by data—not politics.

But the risks remain. Will there be a loss of human judicial discretion? Could this reduce justice to a mathematical probability? Will automated legal systems treat citizens as data points, not rights-holders? The real test of future legal systems will be whether AI can serve justice—not just order—and whether we have the courage and the means to embed human values into AI tools, even when they reduce efficiency.

AI solutions for legal systems should be built with transparency, accountability, and bias mitigation at their core, helping governments, law enforcement agencies, courts, and correctional institutions harness the power of AI without compromising the human values that underpin justice. Whether you’re looking to enhance evidence review processes, streamline case management, or develop predictive analytics for smarter resource allocation, Allerin’s tailored AI solutions can help you achieve both order and justice in your legal systems. To learn more, visit allerin.com or contact us today.