Computer Vision That Works in the Real World.

Deployed at the Edge. Production-Ready in 8 Weeks.

Your cameras become intelligent observers. Your production line gets automated inspection. Your warehouse tracks every movement. And it all runs locally—fast, private, and reliable—without depending on cloud connectivity.

Computer Vision at the Edge: See Everything, Process Instantly

Computer vision gives machines the ability to see and understand visual information—detecting objects, reading text, identifying defects, tracking movement, and recognizing patterns that humans might miss or can't process fast enough.

Edge computer vision takes this further by running the AI directly on local hardware—cameras, embedded devices, or on-site servers—rather than sending video to the cloud.

Speed

Decisions in milliseconds, not seconds. A robotic arm needs to know where to pick. A safety system needs to detect hazards instantly. Cloud round-trips are too slow.

Reliability

Production can't stop because your internet flickered. Edge deployment keeps vision running even when connectivity fails.

Privacy

Your factory floor, your customer behavior, your operational data—processed locally, never leaving your premises.

Bandwidth

Streaming 4K video from dozens of cameras to the cloud is expensive and impractical. Process locally, send only insights.

The result: vision systems that operate in the real world with the speed, reliability, and privacy that production environments demand.

Computer Vision in Action

Object Detection

Real-time object detection identifying parts on a production line, with confidence scores

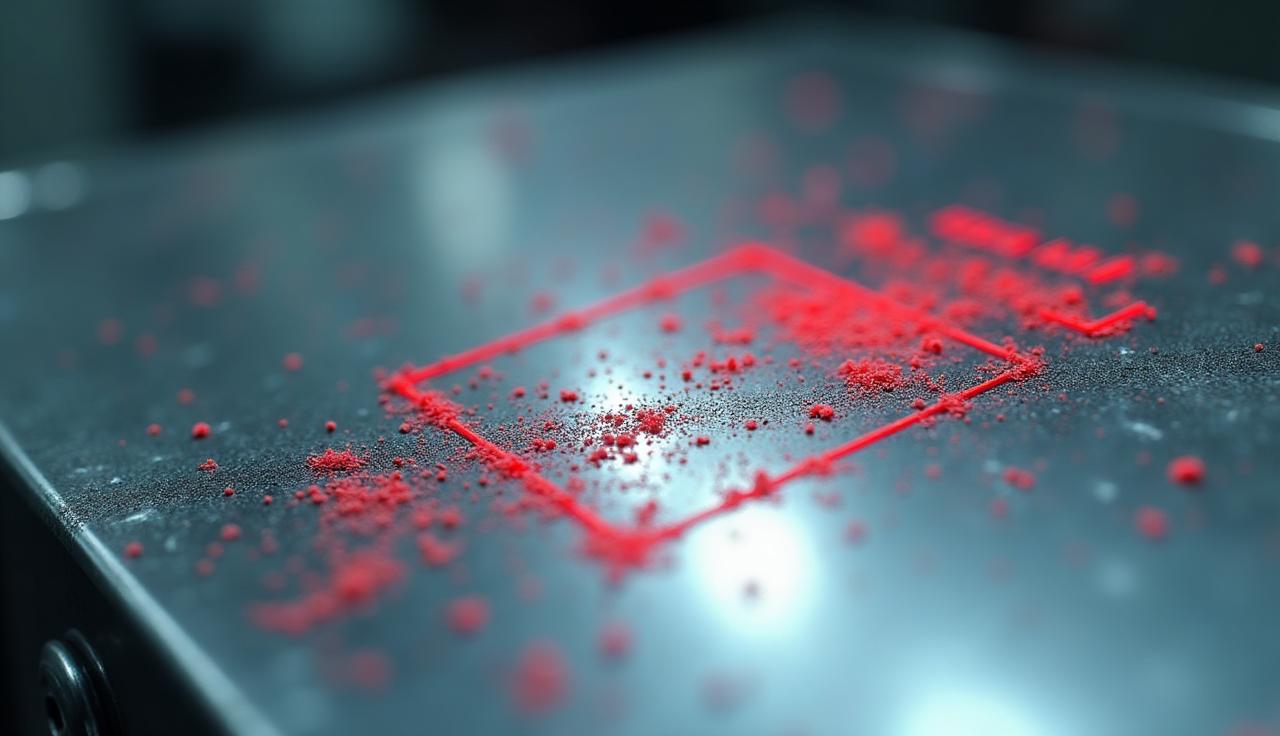

Defect Detection

Surface defect detection that catches issues human inspectors miss

Safety Monitoring

Automated PPE compliance monitoring for hard hats, vests, and safety equipment

Inventory Counting

Accurate inventory counting without manual scanning

Analytics Dashboard

Real-time performance monitoring and drift detection

These examples represent the types of vision systems we deploy. Your implementation will be trained on your specific environment, products, and use case requirements.

Why Computer Vision Projects Fail to Reach Production

The industry has a dirty secret: most computer vision projects never make it out of the lab.

The Demo That Impressed Everyone

Your team trained a model that works beautifully on test images. Leadership gets excited. Then reality hits: the model falls apart under real factory lighting, unexpected angles, and edge cases.

The Cloud Dependency Trap

You built a solution that works—but only when connected to cloud infrastructure. Then your network has a hiccup, and production stops. Or you calculate streaming costs, and the business case evaporates.

The Latency Problem

Your quality inspection model is accurate, but it takes 800ms per frame. At line speed, defective parts are already downstream before results return. The vision system becomes a reporting tool, not a control system.
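To put numbers on the latency problem: a part keeps moving while the model processes its frame. The sketch below is plain arithmetic under hypothetical assumptions (a 0.5 m/s belt and a reject gate 0.25 m downstream of the camera). It ignores exposure, transfer, and actuator delays, so substitute your own line's figures.

```python
def travel_during_inference(latency_ms: float, belt_speed_m_per_s: float) -> float:
    """Distance in meters a part moves while its frame is being processed."""
    return belt_speed_m_per_s * latency_ms / 1000.0


def can_reject_in_time(latency_ms: float, belt_speed_m_per_s: float,
                       camera_to_gate_m: float) -> bool:
    """True if the result is ready before the part reaches the reject gate."""
    return travel_during_inference(latency_ms, belt_speed_m_per_s) < camera_to_gate_m


# Hypothetical line: belt at 0.5 m/s, reject gate 0.25 m downstream of the camera.
BELT_SPEED_M_PER_S = 0.5
GATE_DISTANCE_M = 0.25

for latency_ms in (800, 120, 50):
    moved = travel_during_inference(latency_ms, BELT_SPEED_M_PER_S)
    in_time = can_reject_in_time(latency_ms, BELT_SPEED_M_PER_S, GATE_DISTANCE_M)
    print(f"{latency_ms} ms: part moves {moved:.3f} m, rejected in time: {in_time}")
```

At 800ms the part has moved 0.4 m, past the hypothetical gate, which is why slow inference turns a control system into a report.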

The Hardware Mismatch

You trained on powerful cloud GPUs, but your edge device has a fraction of that compute. The model that worked in development doesn't fit on production hardware.

The "It Stopped Working" Mystery

Six months after deployment, accuracy has quietly degraded. Lighting changed. Products changed. Nothing tracks performance, so problems only surface when customers complain.

The Computer Vision FastTrack exists because we've seen these patterns destroy promising projects—and we've built a methodology to prevent them.

From Cameras to Intelligence in 8 Weeks

The Computer Vision FastTrack isn't a research project. It's a structured program that delivers production-ready vision systems—optimized for your hardware, integrated with your operations, and built with the monitoring to keep them working.

What You Get

Production Vision System

Models trained on your data, optimized for your hardware, deployed in your environment. Not a demo—a working system handling real visual data at production speeds.

Edge-Optimized Models

Neural networks tuned for target latency and frame rate on your specific hardware—whether Jetson, x86 with accelerators, or custom platforms.

MLOps Infrastructure

Automated pipelines for model versioning, A/B testing, and retraining. When performance drifts, you know—and you have tools to fix it.

Monitoring & Observability

Dashboards tracking inference latency, throughput, accuracy metrics, and drift indicators. Know HOW your system performs, not just that it's running.

Integration Layer

APIs and connectors linking vision outputs to your existing systems—PLCs, MES, WMS, ERP, or custom applications.

Operational Runbook

Documentation for monitoring, troubleshooting, scaling, and retraining. Your team can operate and evolve the system independently.

How We Deploy Production Computer Vision in 8 Weeks

Our accelerated timeline comes from parallel workstreams, production-proven components, and relentless focus on deployment—not research. Here's how the eight weeks break down:

Discovery & Data Foundation

We audit your visual environment—cameras, lighting, products, processes. In parallel, we establish data collection and begin building your training dataset.

Deliverables: Environment audit report, hardware recommendations, annotated training dataset

Model Development & Optimization

We train custom models on your data, then optimize aggressively for your target hardware. This isn't just accuracy—it's accuracy at target latency and frame rate.

Deliverables: Trained model, edge-optimized variants, performance benchmark report
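Much of the edge optimization in this phase comes from reduced-precision inference such as INT8. The core arithmetic is easy to see in isolation: the sketch below applies symmetric per-tensor quantization to a few hypothetical weights in plain Python. Toolchains such as TensorRT calibrate scales on representative data and often quantize per channel, so treat this as an illustration of the idea rather than any specific tool's algorithm.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor INT8 quantization.

    scale = max|x| / 127, q = clamp(round(x / scale), -127, 127)
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes: list[int], scale: float) -> list[float]:
    """Map INT8 codes back to approximate float values: x' = q * scale."""
    return [q * scale for q in codes]


weights = [0.82, -1.27, 0.003, 0.5]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)  # rounding error is at most scale / 2 per value
```

Each weight now fits in one byte instead of four, at the cost of a bounded rounding error; accuracy after quantization still has to be re-measured on your footage.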

Integration & Infrastructure

We deploy the optimized model to your edge hardware, build the inference pipeline, and integrate with your existing systems. MLOps infrastructure goes live.

Deliverables: Deployed inference system, MLOps pipelines operational, monitoring live

Validation & Production Launch

Production validation with real data, user training, and formal handoff. We ensure the system performs in actual operating conditions.

Deliverables: Production system live, trained operators, complete documentation, 30-day support begins

What Can You Build in 8 Weeks?

Computer vision transforms any process that currently relies on human eyes—or should have eyes but doesn't. Here's what organizations deploy with FastTrack:

Automated Visual Inspection

Detect defects that human inspectors miss or can't keep pace with. Surface scratches, assembly errors, missing components, dimensional variations—caught in real-time, every unit.

Industries: Manufacturing, Electronics, Pharma, Food Processing

Impact: Reduced escapes, consistent quality, inspection that scales

Object Detection & Counting

Know exactly what's present, where, and how many. Products on shelves, packages on conveyors, vehicles in lots, people in spaces—accurate counts without manual tallying.

Industries: Inventory, Warehouse, Retail, Traffic

Impact: Accurate inventory, automated counting, real-time visibility

Safety & Compliance Monitoring

Ensure PPE is worn, people stay out of hazard zones, safety protocols are followed—automatically, continuously, without dedicated observers.

Industries: Manufacturing, Construction, Warehouse, Labs

Impact: Reduced incidents, compliance documentation, proactive intervention

Robotic Guidance & Pick

Give robots the eyes they need. Locate parts for picking, guide assembly operations, enable navigation in dynamic environments.

Industries: Warehouse Automation, Assembly, Autonomous Vehicles

Impact: Reliable automation, robust handling of variation, faster cycle times

Document & Text Recognition

Extract text from images, documents, labels, and signs automatically. Read shipping labels, capture form data, process invoices.

Industries: Logistics, Document Processing, License Plates

Impact: Eliminated data entry, faster processing, reduced errors

Behavior & Activity Analysis

Understand what's happening, not just what's present. Customer journeys, traffic flow, process adherence, equipment operation.

Industries: Retail Analytics, Security, Process Compliance

Impact: Operational insights, process optimization

Computer Vision for Every Industry and Scale

Manufacturing Operations

The Challenge

You need inspection that keeps pace with production, catches defects humans miss, and runs without network dependencies.

Our Approach

We deploy vision systems that integrate with your PLCs, MES, and existing automation—providing real-time quality data.

What You Get

Inspection at line speed, reduced escapes, quality data integrated with production systems.

The Right Hardware for Your Use Case

There's no one-size-fits-all for edge computer vision. The right hardware depends on your performance requirements, environment, power constraints, and budget. We help you navigate:

NVIDIA Jetson Family

The go-to platform for demanding vision workloads. From Jetson Nano (entry-level) to Orin (server-class performance in an embedded form factor).

x86 + Accelerators

Standard servers or industrial PCs paired with inference accelerators (NVIDIA GPUs, Intel hardware via the OpenVINO toolkit, specialized ASICs). Familiar infrastructure.

Specialized Edge Devices

Purpose-built inference hardware from vendors such as Hailo, Google (Coral), and Intel (Movidius). Optimized for specific performance/power/cost trade-offs.

Camera-Level Intelligence

Smart cameras with built-in inference capabilities. Processing happens in the camera itself—no separate compute needed.

Our Guidance

We help you select based on your actual requirements—not just what's newest or most powerful. Sometimes a $150 device is the right choice; sometimes you need $2,000 in compute.

Built for Production, Not Just Demos

Deploying a model is the beginning, not the end. Production computer vision requires operational infrastructure that most POCs completely ignore.

Model Versioning & Rollback

Track which model version is deployed where. When you need to update—or roll back—you can do it confidently across your fleet.
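A minimal sketch of the bookkeeping behind versioning and rollback, assuming a hypothetical in-memory registry that records each device's version history. A production registry would persist this state and coordinate the actual model download on each device; the class, method, device, and version names here are illustrative, not a real API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DeviceDeployment:
    """Model versions deployed to one edge device, oldest first."""
    device_id: str
    history: list = field(default_factory=list)

    @property
    def current(self) -> Optional[str]:
        return self.history[-1] if self.history else None


class ModelRegistry:
    """Hypothetical in-memory registry of which model version runs on which device."""

    def __init__(self) -> None:
        self._devices: dict = {}

    def deploy(self, device_id: str, version: str) -> None:
        """Record a deployment; redeploying the current version is a no-op."""
        dep = self._devices.setdefault(device_id, DeviceDeployment(device_id))
        if dep.current != version:
            dep.history.append(version)

    def rollback(self, device_id: str) -> str:
        """Revert a device to its previous version and return that version."""
        dep = self._devices[device_id]
        if len(dep.history) < 2:
            raise ValueError(f"{device_id} has no earlier version to roll back to")
        dep.history.pop()
        return dep.history[-1]

    def devices_running(self, version: str) -> list:
        return sorted(d.device_id for d in self._devices.values() if d.current == version)


registry = ModelRegistry()
for device in ("line1-cam1", "line1-cam2"):
    registry.deploy(device, "defects-v1.3")
registry.deploy("line1-cam1", "defects-v1.4")  # try the update on one device first
print(registry.devices_running("defects-v1.4"))  # ['line1-cam1']
print(registry.rollback("line1-cam1"))  # defects-v1.3
```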

Performance Monitoring

Track inference latency, throughput, and resource utilization in real-time. Know when performance degrades before it affects operations.
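Latency figures such as p95 come from per-frame timings. A minimal sketch, assuming you already log one latency value per frame: it computes nearest-rank percentiles and throughput over a time window. Monitoring stacks such as Prometheus often estimate percentiles from histogram buckets instead, so their numbers approximate the same quantity.

```python
import math


def percentile_nearest_rank(samples: list[float], pct: float) -> float:
    """Smallest sample with at least pct percent of all samples at or below it."""
    if not samples or not 0 < pct <= 100:
        raise ValueError("need at least one sample and 0 < pct <= 100")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def summarize(latencies_ms: list[float], window_s: float) -> dict[str, float]:
    """Latency percentiles and throughput for the frames processed in one window."""
    return {
        "p50_ms": percentile_nearest_rank(latencies_ms, 50),
        "p95_ms": percentile_nearest_rank(latencies_ms, 95),
        "max_ms": max(latencies_ms),
        "fps": len(latencies_ms) / window_s,
    }


# Hypothetical window: 20 frames in 1 second, two of them slow.
latencies = [40.0] * 18 + [90.0, 300.0]
print(summarize(latencies, window_s=1.0))
# {'p50_ms': 40.0, 'p95_ms': 90.0, 'max_ms': 300.0, 'fps': 20.0}
```

In this example the mean latency is 55.5 ms, which blurs the two slow frames into the average; the percentiles and the maximum report them separately.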

Accuracy Drift Detection

Production environments change. Lighting shifts, products evolve. Drift detection alerts you when accuracy drops below thresholds—before you discover problems through escaped defects.
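One simple way to implement a threshold alert, assuming operators spot-check a sample of predictions so that ground truth exists for some frames: keep a rolling window of reviewed results and alert when accuracy over a full window drops below the threshold. The class name, window size, and threshold are hypothetical. Label-free signals, such as shifts in confidence scores or image brightness, are common complements when reviewed labels arrive slowly.

```python
from collections import deque


class AccuracyDriftMonitor:
    """Rolling accuracy over the last `window` human-reviewed predictions.

    An alert fires only once the window is full, so a handful of early
    reviews cannot trigger it on their own.
    """

    def __init__(self, window: int = 200, threshold: float = 0.95) -> None:
        self.results: deque = deque(maxlen=window)  # oldest reviews fall out automatically
        self.threshold = threshold

    @property
    def accuracy(self) -> float:
        return sum(self.results) / len(self.results) if self.results else 1.0

    def is_drifting(self) -> bool:
        full = len(self.results) == self.results.maxlen
        return full and self.accuracy < self.threshold

    def record(self, prediction_correct: bool) -> bool:
        """Add one reviewed prediction; return True if accuracy is below threshold."""
        self.results.append(prediction_correct)
        return self.is_drifting()


monitor = AccuracyDriftMonitor(window=10, threshold=0.9)
for correct in [True] * 10 + [False, False]:
    if monitor.record(correct):
        print(f"drift alert: rolling accuracy {monitor.accuracy:.2f}")
```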

Automated Retraining Pipelines

When drift occurs, retraining workflows make correction efficient: new data flows into training, updated models are validated, and deployment happens with minimal manual work.
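The validation step is often implemented as a promotion gate. The sketch below assumes both models are scored on the same held-out set of your footage, and that a retrained model must meet absolute precision, recall, and latency targets without regressing noticeably against the model currently deployed. The thresholds, tolerance, and names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalResult:
    """Scores for one model on a fixed held-out evaluation set."""
    precision: float
    recall: float
    p95_latency_ms: float


def should_promote(candidate: EvalResult, current: EvalResult,
                   min_precision: float, min_recall: float,
                   max_latency_ms: float, max_regression: float = 0.005) -> bool:
    """Promote only if the candidate meets absolute targets and does not regress
    on precision or recall by more than max_regression versus the current model."""
    meets_targets = (candidate.precision >= min_precision
                     and candidate.recall >= min_recall
                     and candidate.p95_latency_ms <= max_latency_ms)
    no_regression = (candidate.precision >= current.precision - max_regression
                     and candidate.recall >= current.recall - max_regression)
    return meets_targets and no_regression


current = EvalResult(precision=0.96, recall=0.93, p95_latency_ms=110.0)
retrained = EvalResult(precision=0.97, recall=0.95, p95_latency_ms=115.0)
too_slow = EvalResult(precision=0.98, recall=0.96, p95_latency_ms=140.0)
for name, model in (("retrained", retrained), ("too_slow", too_slow)):
    print(name, should_promote(model, current, min_precision=0.95,
                               min_recall=0.92, max_latency_ms=120.0))
```

Checking latency alongside accuracy matters on edge hardware: a more accurate model that misses the latency budget is not an improvement in production.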

Fleet Management

Running vision on dozens or hundreds of devices? Centralized management for deploying updates, monitoring status, and troubleshooting across your entire deployment.

The Production Vision Lifecycle

Continuous improvement loop built into every deployment


Why FastTrack Succeeds Where Other Projects Stall

Edge-First Architecture

We don't build for the cloud and try to fit it on edge hardware. Every architecture decision assumes edge deployment from day one.

Production Intent from Day One

From project kickoff, we're building for production. Hardware selection, integration planning, and operational requirements are addressed in week one—not month six.

Hardware Expertise

We know the trade-offs between Jetson variants, between x86+GPU and specialized accelerators. Deployment experience informs practical recommendations.

MLOps Maturity

Drift detection, retraining pipelines, model versioning—these aren't add-ons. They're foundational infrastructure delivered with every deployment.

Latency and Throughput Guarantees

We optimize for YOUR performance requirements. If you need 30 FPS with <50ms latency, we design for that constraint—not just maximum accuracy.
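The arithmetic behind that example: 30 FPS means a new frame every 1000/30 ≈ 33.3 ms. Latency and throughput are separate constraints, and a pipeline can satisfy both even when total processing takes longer than one frame interval, provided stages overlap across consecutive frames. The sketch below uses hypothetical stage timings, assumes one worker per stage, and ignores queuing and transfer overhead; it shows the same 41 ms chain missing 30 FPS when run sequentially and meeting it when pipelined.

```python
def frame_interval_ms(fps: float) -> float:
    """Time available per frame at a given frame rate."""
    return 1000.0 / fps


def pipeline_metrics(stage_ms: list[float], pipelined: bool) -> tuple[float, float]:
    """Return (throughput in FPS, end-to-end latency in ms) for a chain of stages.

    Sequential: a frame finishes every stage before the next frame starts.
    Pipelined: stages work on consecutive frames at once, so the slowest
    stage sets the pace while latency is still the sum of all stages.
    """
    latency_ms = sum(stage_ms)
    time_per_frame_ms = max(stage_ms) if pipelined else latency_ms
    return 1000.0 / time_per_frame_ms, latency_ms


def meets_targets(stage_ms: list[float], target_fps: float,
                  max_latency_ms: float, pipelined: bool) -> bool:
    fps, latency_ms = pipeline_metrics(stage_ms, pipelined)
    return fps >= target_fps and latency_ms <= max_latency_ms


stages_ms = [8.0, 5.0, 22.0, 6.0]  # hypothetical: decode, preprocess, inference, postprocess
print(f"budget per frame at 30 FPS: {frame_interval_ms(30):.1f} ms")
for pipelined in (False, True):
    fps, latency_ms = pipeline_metrics(stages_ms, pipelined)
    ok = meets_targets(stages_ms, target_fps=30, max_latency_ms=50, pipelined=pipelined)
    print(f"pipelined={pipelined}: {fps:.1f} FPS, {latency_ms:.0f} ms latency, meets targets: {ok}")
```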

Knowledge Transfer

We're building YOUR capability, not dependency. Every engagement includes documentation, training, and handoff so your team can operate independently.

Investment & Engagement Options

Discovery Sprint

Visual environment assessment, use case feasibility analysis, hardware recommendations, data requirements evaluation, ROI assessment.

Best For: Organizations exploring computer vision possibilities

FastTrack Standard

Single use case, 1-4 camera deployment, edge hardware provisioning, MLOps infrastructure, integration with one target system, 30-day support.

Best For: Organizations with a clear use case ready for production

FastTrack Enterprise

Multiple use cases, multi-camera/multi-location, complex integrations, advanced MLOps, fleet management, extended support, team training.

Best For: Large-scale deployments or complex environments

Ready to Give Your Operations the Gift of Sight?

Start with a conversation. We'll discuss your visual challenges, assess feasibility, and tell you honestly whether FastTrack is the right approach for your situation.

At a Glance

Key Takeaways:

- Computer Vision FastTrack deploys production edge AI in 4-8 weeks (proof of concept in 4 weeks, production-ready with MLOps in 8), with <200ms latency and 96% accuracy
- Includes custom model training, edge optimization (TensorRT/ONNX), and MLOps workflows
- Supports Jetson, x86, and ARM, with cloud fallback for batch processing
- Typical deployment: 96% accuracy, 120ms p95 latency, 87% reduction in manual review


Safety & Governance

Complete chain-of-custody for all annotations, approvals, and model updates with reason codes and timestamps.
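A common way to make a chain of custody tamper-evident is hash chaining: every entry stores the hash of the previous entry, so editing any past record breaks the links after it. The sketch below illustrates that general technique with Python's standard library. It is not a description of this product's storage format; the field names, actors, and reason codes are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding (sorted keys) of an entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: list, actor: str, action: str, reason_code: str,
                 timestamp: Optional[str] = None) -> dict:
    """Append an audit entry that is linked to the previous one by its hash."""
    body = {
        "actor": actor,
        "action": action,
        "reason_code": reason_code,
        "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every hash and link; any edited entry makes this return False."""
    prev = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body.get("prev_hash") != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


audit_log: list = []
append_entry(audit_log, "annotator-07", "annotation.approve", "QA_PASS")
append_entry(audit_log, "mle-02", "model.promote", "DRIFT_RETRAIN")
print(verify_chain(audit_log))  # True
audit_log[0]["reason_code"] = "EDITED"  # simulate tampering with history
print(verify_chain(audit_log))  # False
```

Hash chaining detects edits but cannot stop someone from rewriting the entire chain, so production systems typically also write to append-only storage or anchor the latest hash somewhere independent.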

Configurable retention windows (7-365 days) with cold-tier archival. Supports SOC 2, CJIS, and industry-specific requirements.

Automatic face blurring, license plate redaction, and configurable masking zones for privacy compliance (GDPR, CCPA).
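Face and license-plate redaction needs a detector to locate the regions; the final step, obscuring the pixels inside fixed or detected rectangles, is simple. A minimal NumPy sketch, assuming zones arrive as hypothetical (x, y, width, height) pixel rectangles: each zone is pixelated by block averaging. Coarse pixelation or solid fill is generally harder to reverse than a light blur.

```python
import numpy as np


def apply_masking_zones(frame: np.ndarray, zones: list[tuple[int, int, int, int]],
                        block: int = 16) -> np.ndarray:
    """Pixelate rectangular zones given as (x, y, width, height) in pixel coordinates.

    Works on H x W (grayscale) or H x W x C frames. Each zone is covered with
    block x block tiles, and every tile is replaced by its mean color. Returns a
    new array; the input frame is left untouched.
    """
    out = frame.copy()
    height, width = out.shape[:2]
    for x, y, zone_w, zone_h in zones:
        x0, y0 = max(0, x), max(0, y)
        x1, y1 = min(width, x + zone_w), min(height, y + zone_h)  # clip to frame
        for ty in range(y0, y1, block):
            for tx in range(x0, x1, block):
                tile = out[ty:min(ty + block, y1), tx:min(tx + block, x1)]  # a view into out
                tile[...] = tile.mean(axis=(0, 1), keepdims=True).astype(out.dtype)
    return out


rng = np.random.default_rng(0)
frame = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)  # stand-in for a camera frame
masked = apply_masking_zones(frame, zones=[(8, 8, 32, 16)], block=8)
```

Applying masks on the device, before frames are stored or transmitted, keeps unmasked imagery from leaving the premises.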

Industry Deployment Patterns

How different industries use Computer Vision in production environments.

Manufacturing

Defect detection & assembly verification

Identify surface defects, misalignments, and missing components on production lines with 96%+ accuracy

Transportation & Rail

Track/ROW inspection & rolling stock monitoring

Automated detection of rail surface defects, vegetation encroachment, and equipment anomalies, reducing manual inspection time by 87%

Warehousing & Logistics

Package counting, damage detection & compliance

Real-time package damage detection and volumetric counting with sub-second latency for high-throughput operations

Retail

Shelf compliance & queue management

Monitor product placement, planogram compliance, and customer queue depth for operational optimization

Architecture Decision Guide

Choosing the right deployment architecture for your Computer Vision system.

| Approach | When to Use | Trade-offs | Best For |
|---|---|---|---|
| Edge-Only | On-prem requirements, no cloud connectivity, <100ms latency needed | Lower cost, data sovereignty, limited model size, manual updates | Government/DOT, utilities, remote sites |
| Hybrid Edge + Cloud | Real-time inference at edge + batch analytics in cloud | Best of both worlds, resilient to connectivity loss, moderate complexity | Manufacturing, retail, logistics |
| Cloud-Fallback | Primary cloud inference with edge backup during outages | Simpler edge footprint, higher latency, cloud dependency | Enterprise with reliable connectivity |
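In the hybrid pattern, the edge keeps running inference while the cloud link is down; results simply wait. A minimal store-and-forward sketch, assuming a hypothetical `send` callable that wraps your REST or MQTT client and returns True on success. The bounded queue drops the oldest results during a long outage instead of exhausting memory.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Buffer inference results locally; upload them in order when the link is up."""

    def __init__(self, send: Callable[[dict], bool], max_items: int = 10_000) -> None:
        self.send = send  # returns True if the result was delivered
        self.pending: deque = deque(maxlen=max_items)  # oldest dropped when full

    def publish(self, result: dict) -> None:
        """Queue a result and try to drain the queue immediately."""
        self.pending.append(result)
        self.flush()

    def flush(self) -> int:
        """Send queued results oldest-first, stopping at the first failure."""
        sent = 0
        while self.pending:
            if not self.send(self.pending[0]):
                break
            self.pending.popleft()
            sent += 1
        return sent


# Simulated outage: the uploader fails until the link comes back.
link_up = False
uploaded = []


def fake_send(result: dict) -> bool:
    if link_up:
        uploaded.append(result)
    return link_up


buffer = StoreAndForward(fake_send, max_items=3)
for frame_id in range(5):
    buffer.publish({"frame": frame_id, "defect": False})
link_up = True
buffer.flush()
print([r["frame"] for r in uploaded])  # [2, 3, 4]
```

A real deployment would persist the queue to disk so results survive a reboot, and would flush from a background thread so uploads never block inference.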

Procurement & RFP Readiness

Common requirements for Computer Vision vendor evaluation and compliance.

Need vendor compliance docs? Visit Trust Center →

When to Choose What

Computer Vision FastTrack builds visual detection models. For text-based AI features, consider the GenAI Accelerator.

Computer Vision FastTrack

Best for visual detection/tracking/classification

- Object detection and tracking (people, vehicles, defects)
- Quality inspection and defect classification
- Edge deployment with low-latency requirements
- Real-time video stream analysis

Computer Vision Deployment Outcomes

- Model meets precision/recall targets on your footage
- Edge pipeline meets target latency with stable FPS
- Ops workflow (review, re-label, retrain) is live
- Measurable reduction in manual review time (typically 87%)
- Drift monitoring and automated retraining triggers


Industry Benchmarks & Performance

Representative performance metrics from typical Computer Vision deployments.*

*Representative industry examples based on typical deployments. Actual results vary by use case, data quality, infrastructure configuration, and deployment environment.

Hardware & Technology Compatibility

Proven deployment stack across edge devices, streaming protocols, and inference frameworks.

Edge Hardware

Inference Frameworks

Streaming Protocols

Model Formats

Integration Points

Timeline

4 weeks (PoC), 8 weeks (production-ready with MLOps)

Team

CV lead, machine learning engineer (MLE), edge developer, front-end developer (FE), QA engineer; plus an ops reviewer for deployment

Industry Benchmarks & Statistics

Based on 40+ edge CV deployments across manufacturing, warehousing, and retail operations.

Inputs We Need

- Sample footage or image sets (200-500 frames minimum)
- Labeling guidelines or existing annotations
- Target edge hardware specs (Jetson/x86/ARM)
- Precision/recall targets and acceptable latency
- Ops workflow requirements (drift alerts, retraining triggers)

Tech & Deployment

- Edge hardware: NVIDIA Jetson (Nano/Xavier/Orin), x86 (Intel/AMD), ARM (Raspberry Pi/custom).
- Models: YOLOv8/YOLO11, EfficientDet, custom CNNs; ONNX/TensorRT export; INT8 quantization.
- Frameworks: PyTorch/TensorFlow → ONNX; TensorRT optimization for a 3-5x speedup.
- Streaming: RTSP/RTMP/USB; frame buffering and batching; resilient reconnect with backoff; timestamp sync with clock drift checks.
- Deployment: Docker/K3s on edge; REST/MQTT for results; centralized model registry.
- Edge management: thermal throttling guard; GPU temperature monitoring; rolling buffers with cold-tier (S3/Azure Blob) lifecycle rules.
- MLOps: Prometheus/Grafana for drift monitoring; Label Studio/CVAT for re-labeling; DVC for dataset versioning.
- Cloud fallback: AWS/GCP/Azure for heavy inference or batch processing.
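Resilient reconnect with backoff usually means capped exponential backoff with random jitter, so a fleet of cameras that drops at the same moment does not reconnect in lockstep. The sketch below implements the "full jitter" variant. `connect` is a hypothetical stand-in for opening your RTSP session, and the defaults are illustrative rather than recommended values.

```python
import random
import time
from typing import Callable, Optional


def reconnect_with_backoff(connect: Callable[[], bool], max_attempts: int = 10,
                           base_s: float = 0.5, cap_s: float = 30.0,
                           sleep: Callable[[float], None] = time.sleep,
                           rng: Optional[random.Random] = None) -> bool:
    """Retry `connect` using capped exponential backoff with full jitter.

    After failed attempt n (0-based), wait a random time in
    [0, min(cap_s, base_s * 2**n)] before trying again. Returns True as soon
    as `connect` succeeds, or False after max_attempts failures.
    """
    rng = rng or random.Random()
    for attempt in range(max_attempts):
        if connect():
            return True
        if attempt < max_attempts - 1:  # no point waiting after the final attempt
            sleep(rng.uniform(0, min(cap_s, base_s * 2 ** attempt)))
    return False


# Stand-in for opening an RTSP session: fails three times, then succeeds.
calls = {"n": 0}


def flaky_connect() -> bool:
    calls["n"] += 1
    return calls["n"] >= 4


waits: list = []  # record the delays instead of actually sleeping
ok = reconnect_with_backoff(flaky_connect, sleep=waits.append, rng=random.Random(42))
print(ok, [round(w, 3) for w in waits])
```

Injecting `sleep` and `rng` keeps the retry policy testable without real waiting.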


Need More Capabilities?

Explore related services that complement this offering.

Ready to Get Started?

Book a free 30-minute scoping call with a solution architect.

Procurement team? Visit Trust Center →